Underwater human-robot communication remains an open challenge, particularly when robots must accept instructions from only a few select individuals wearing similar outfits. Previous solutions [1], [2] leverage differences in swimming patterns to uniquely identify scuba divers; however, these algorithms are inapplicable to close-proximity interactions because they require the entire body to be visible and in motion.

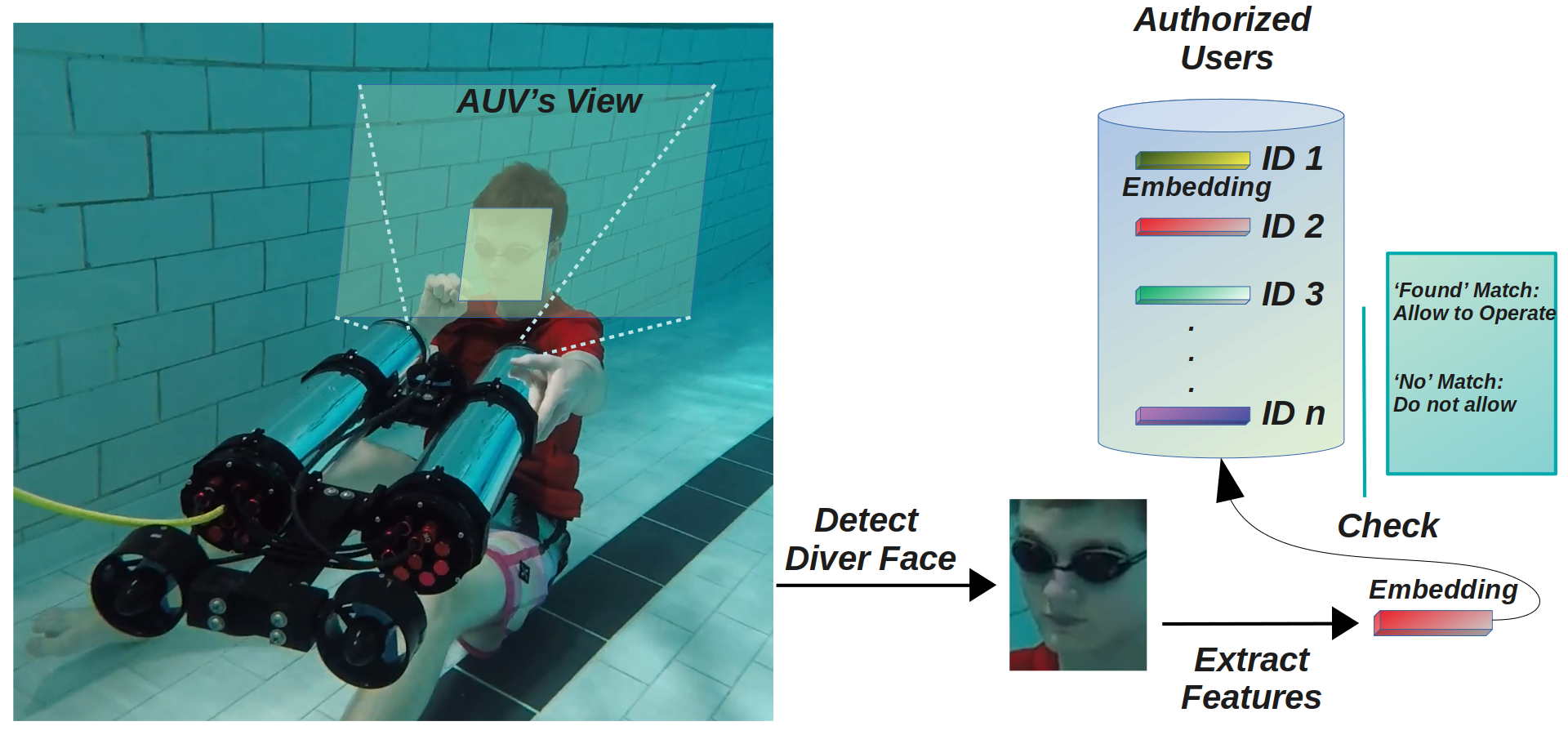

To this end, we propose a framework to identify scuba divers underwater using facial recognition. A diver's face is usually heavily obscured by a scuba mask and breathing apparatus. As a result, state-of-the-art face recognition algorithms [3], [4] struggle to extract robust features from diver faces. We address this issue by developing a data augmentation technique that creates realistic diver faces from regular (non-diver) faces, and we use it to construct a dataset that includes both diver and non-diver faces. We use this dataset to learn highly discriminative features representing diver faces. We then create a database that holds the diver face representations of all authorized users, extracted using the previously learned model. This database can be queried during real-time inference. Fig. 1 demonstrates the framework.

Fig. 1: Demonstration of a diver face identification system for underwater human-robot interaction. The framework detects diver faces underwater and extracts discriminative feature embeddings which are matched against pre-computed embeddings of authorized users stored in a database.
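The matching step described above can be illustrated with a minimal sketch. It is not the implementation from the paper: the similarity metric (cosine similarity), the acceptance threshold of 0.5, the function names `cosine_similarity` and `identify_diver`, and the toy 3-dimensional embeddings are all assumptions made for illustration. In practice the embeddings would come from the learned model and be much higher-dimensional.

```python
import math
from typing import Dict, List, Optional, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two embedding vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def identify_diver(
    query: List[float],
    database: Dict[str, List[float]],
    threshold: float = 0.5,  # assumed value, not taken from the paper
) -> Tuple[Optional[str], float]:
    """Return (best matching user, score), or (None, score) if no match clears the threshold."""
    best_name: Optional[str] = None
    best_score = -1.0
    for name, embedding in database.items():
        score = cosine_similarity(query, embedding)
        if score > best_score:
            best_name, best_score = name, score
    if best_score < threshold:
        return None, best_score  # treat the face as an unauthorized user
    return best_name, best_score


# Hypothetical pre-computed embeddings of authorized users (toy values).
AUTHORIZED = {
    "diver_a": [1.0, 0.0, 0.0],
    "diver_b": [0.0, 1.0, 0.0],
}

match, score = identify_diver([0.9, 0.1, 0.0], AUTHORIZED)
print(match, round(score, 3))
```

Rejecting queries whose best score falls below the threshold is what lets such a system ignore divers who are not in the database; the threshold would need to be tuned on held-out data.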

We validate the effectiveness of the proposed system through qualitative and quantitative experiments and compare its performance with that of several state-of-the-art algorithms. We also analyze the practical feasibility of this framework for mobile platforms. Detailed information can be found in the paper.

Paper (Preprint): https://arxiv.org/pdf/2011.09556.pdf

References:

[1] Y. Xia and J. Sattar, “Visual Diver Recognition for Underwater Human-Robot Collaboration,” in 2019 International Conference on Robotics and Automation (ICRA). IEEE, 2019, pp. 6839–6845.

[2] K. de Langis and J. Sattar, “Realtime Multi-Diver Tracking and Re-identification for Underwater Human-Robot Collaboration,” in 2020 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2020, pp. 11140–11146.

[3] J. Deng, J. Guo, N. Xue, and S. Zafeiriou, “ArcFace: Additive Angular Margin Loss for Deep Face Recognition,” in 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019, pp. 4685–4694.

[4] H. Wang, Y. Wang, Z. Zhou, X. Ji, D. Gong, J. Zhou, Z. Li, and W. Liu, “CosFace: Large Margin Cosine Loss for Deep Face Recognition,” in 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018, pp. 5265–5274.