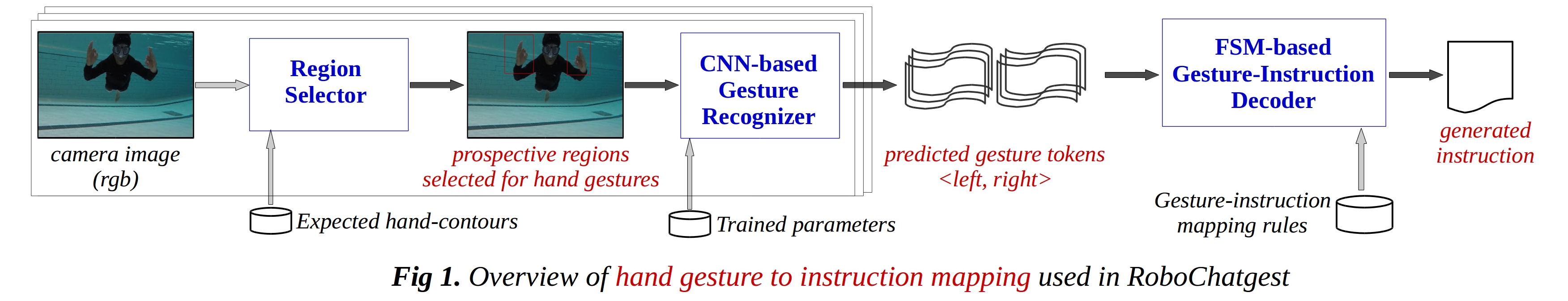

RoboChatGest is a real-time method for programming autonomous underwater robots and reconfiguring their parameters using a set of intuitive, meaningful hand gestures. Its syntactically simple framework is computationally more efficient than complex, grammar-based approaches.

The major components are:

- A simple set of 'hand gesture-to-instruction' mapping rules

- A 'region selection' mechanism to detect prospective hand gestures in image space

- A convolutional neural network (CNN) model for robust hand gesture recognition

- A Finite State Machine to efficiently decode complete instructions from the sequence of gestures
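As a rough illustration of how a finite state machine can turn a stream of recognized gestures into complete instructions, here is a minimal sketch. It assumes a hypothetical token grammar (`start`, one command gesture, a fixed number of digit gestures, then `end`). The gesture labels, the `COMMANDS` and `DIGITS` tables, and the `decode` function are all hypothetical stand-ins, not RoboChatGest's actual vocabulary or implementation. In practice the input would be the CNN's per-frame predictions after some stabilization.

```python
from enum import Enum, auto

# Hypothetical mapping rules: command gesture -> (instruction name, number of digit parameters).
COMMANDS = {
    "left": ("TURN_LEFT", 0),
    "right": ("TURN_RIGHT", 0),
    "up": ("ASCEND", 0),
    "down": ("DESCEND", 0),
    "speed": ("SET_SPEED", 1),  # takes one digit parameter
    "hover": ("HOVER", 2),      # takes two digit parameters
}
# Hypothetical digit gestures used as instruction parameters.
DIGITS = {"zero": 0, "one": 1, "two": 2, "three": 3, "four": 4, "five": 5}


class State(Enum):
    IDLE = auto()     # waiting for the start token
    COMMAND = auto()  # expecting a command gesture
    PARAM = auto()    # collecting digit parameters
    END = auto()      # expecting the end token


def decode(gestures):
    """Decode a sequence of gesture labels into (instruction, params) tuples."""
    instructions = []
    state = State.IDLE
    name, needed, params = None, 0, []
    for g in gestures:
        if state is State.IDLE:
            if g == "start":
                state = State.COMMAND
        elif state is State.COMMAND:
            if g in COMMANDS:
                name, needed = COMMANDS[g]
                params = []
                state = State.PARAM if needed else State.END
            else:
                state = State.IDLE  # unexpected token: discard the partial instruction
        elif state is State.PARAM:
            if g in DIGITS:
                params.append(DIGITS[g])
                if len(params) == needed:
                    state = State.END
            else:
                state = State.IDLE  # non-digit token: discard the partial instruction
        elif state is State.END:
            if g == "end":
                instructions.append((name, tuple(params)))
            state = State.IDLE  # instruction completed or aborted; wait for a new start
    return instructions


if __name__ == "__main__":
    stream = ["start", "speed", "three", "end", "noise", "start", "left", "end"]
    print(decode(stream))  # [('SET_SPEED', (3,)), ('TURN_LEFT', ())]
```

Because every state has only a handful of valid transitions, decoding is a constant-time lookup per gesture. Any unexpected token simply drops the partial instruction, so a misrecognized gesture cannot be silently turned into a wrong command.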

The key aspect of this framework is that divers can easily adopt it to communicate simple instructions to underwater robots, without artificial tags such as fiducial markers and without having to memorize a potentially complex set of language rules.


Important pointers:
