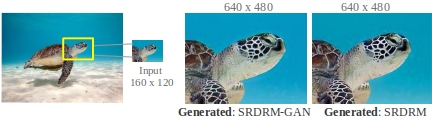

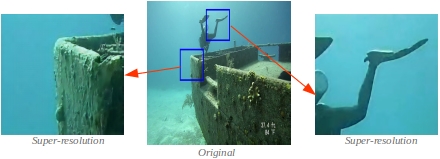

We present a fully-convolutional deep residual network-based generative model for single image super-resolution underwater (SISR), which we refer to as SRDRM. We also formulate an adversarial training pipeline (i.e., SRDM-GAN) by designing a multi-modal objective function that evaluates the perceptual image quality based on its global content, color, and local style information. In our implementation, SRDRM and SRDM-GAN can learn to generate 640x480 images from respective inputs of size 320x240, 80x60, or 160x120. Additionally, we present USR-248, a large-scale dataset that contains paired instances for supervised training of 2x, 4x, or 8x SISR models.

We validate the effectiveness of SRDRM and SRDRM-GAN through qualitative and quantitative experiments and compare the results with several state-of-the-art models' performances. We also analyze their practical feasibility for applications such as scene understanding and attention modeling in noisy visual conditions. Detailed information can be found in the paper; also, check out this GitHub repository for the models and associated training pipelines.

|

Important pointers:

|